Manuscript accepted on : 04 February 2016

Published online on: 07-03-2016

Forensic Dentistry In Human Identification Using Anfis

R. Ranjanie, P.Muthu Krishnammal

Department of ECE, Sathyabama University, Chennai

DOI : http://dx.doi.org/10.13005/bbra/2073

ABSTRACT: Forensic dentistry involves the identification of people based on their dental records, chiefly available as radiograph images. Our goal is to automate this process using image processing and pattern recognition techniques. Given a postmortem radiograph, we search a predefined database of antemortem radiographs to retrieve the closest match with respect to some salient features. The image is passed through a Gaussian filter to remove noise, and its quality is further improved using adaptive histogram equalization. The radius vector function and support function are extracted, and the features are classified using an adaptive neuro-fuzzy inference system (ANFIS).

KEYWORDS: Gaussian filter; histogram equalization; feature extraction; ANFIS

Cite this article: Ranjanie R, Krishnammal P. M. Forensic Dentistry In Human Identification Using Anfis. Biosci Biotech Res Asia 2016;13(1). Available from: https://www.biotech-asia.org/?p=6965

Introduction

Nowadays many factories, public offices and corporate offices rely on biometric identification such as eye recognition, facial images and fingerprints. These methods work well in ordinary cases but fail when the body is badly damaged, for example in fire accidents. Dental features remain robust for identifying the person in such cases, so we use dental image processing for identification. Forensic odontology, also called dental biometrics, identifies individuals based on their dental characteristics. Unlabeled post-mortem (PM) dental radiographs are matched against labeled ante-mortem (AM) dental radiographs stored in a database to find the highest similarity. Dental image processing therefore helps identify the person even when the face or the whole body has been burnt in a fire accident. The radiographic image is first acquired and processed; its features are extracted and a unique code is generated for reference. The code of the query image is then compared with the codes of the images already stored in the database, and this comparison identifies the person.

There are three types of dental radiographs (X-rays): bitewing, periapical and panoramic. Bitewing X-rays are generally taken during routine checkups and are used for revealing cavities in teeth (Fig. 1(a)). Periapical X-rays show the entire tooth, including the crown, the root, and the bone surrounding the root (Fig. 1(b)). Panoramic X-rays provide information not only about the teeth, but also about the lower and upper jawbones, the sinuses, and other hard and soft tissues in the head and neck (Fig. 1(c)).

An individual’s dentition includes information about the number of teeth present, the orientation of those teeth, and dental restorations. Each dental restoration is unique because it is designed specifically for that particular tooth. An individual’s dentition is defined by the combination of all these attributes, and the dentition can be used to distinguish one individual from another. The information about dentition is represented in the form of dental codes and dental radiographs.

Figure 1: Three types of dental radiographs. (a) A bitewing radiograph; (b) a periapical radiograph; (c) a panoramic radiograph.

Literature Review

Ayman Abaza, Arun Ross and Hany Ammar (2009) proposed the Eigen teeth method, which reduces the search time of record-to-record matching by a factor of one hundred. The main aim of this method is to reduce the time for dental record retrieval while keeping reasonable accuracy. The approach relies on dimensionality reduction using PCA.

Dipali Rindhe and A.N. Shaikh (2013) presented a paper based on shape extraction and shape matching techniques for the identification of individuals. Identification can be done in two ways: one technique is contour based and the other is skeleton based. For shape extraction they used two methods, the SBGFRLS method and a skeletal representation. The extracted shapes are then used for shape matching.

Deven N. Trivedi, Ashish M. Kothari and Shingala Nikunj (2014) presented a paper based on edge detection. To obtain the teeth contours they used image acquisition, enhancement and segmentation. The Canny algorithm is used to detect the edges. Its first step is image smoothing, in which a low-pass or Gaussian filter removes noise from the image. The next step is non-maxima suppression, after which a threshold is applied for edge detection. The next stage is pre-processing, in which size matching and shape matching are done. After pre-processing comes segmentation, in which the radiograph images are segmented into regions; noise filtering is also carried out during segmentation.

Martin L. Tangel and Chastine Fatichah (2013) presented a paper on dental classification for periapical X-rays based on multiple fuzzy attributes, where each tooth is analyzed based on multiple criteria such as area/perimeter ratio and width/height ratio. A classification procedure for a special type of image, the periapical radiograph, is proposed, and classification is done after a speculative classification step (for ambiguous objects). An accurate and useful result can therefore be obtained, owing to the method's ability to handle ambiguous teeth.

Omaima Nomir and Mohamed Abdel-Mottaleb (2008) introduced a technique based on the hierarchical chamfer algorithm, which is used for matching teeth contours. The technique involves feature extraction and teeth matching. In feature extraction, the edge curve pixels are derived for all AM teeth in the database: the contour of each tooth is extracted and a distance map image is built for each AM tooth. At the teeth matching stage, the teeth in the given PM image are first segmented, and the distance between each AM tooth distance map and the PM tooth contour is calculated at different resolution levels using the hierarchical edge matching algorithm. During retrieval, the AM records are ranked according to the matching distance between the AM and PM teeth.

Sunita Sood and Ranju Kanwar (2014) presented a paper on image thresholding before segmentation of teeth using the Otsu algorithm. The principle of the Otsu algorithm is to minimize the within-class variance. Once the threshold T is selected, image pixels with gray-level intensity less than T are set to zero and those greater than T are set to one. This produces a binary image with some noisy pixels in the form of salt-and-pepper noise, which are then removed using a salt-and-pepper noise removal algorithm. The binary image is divided into four sectors: top left, top right, bottom left and bottom right. Every jaw has a fixed number of teeth, which gives information about missing teeth. Missing teeth can be found from the distance between two teeth: if a tooth is missing between two teeth, the gap between them will be larger than in the normal case.
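The thresholding step described above can be sketched in a few lines. This is a minimal illustration in plain Python, not the code used in the cited paper; the function names `otsu_threshold` and `binarize` and the sample pixel values are hypothetical. It searches for the gray level that maximizes the between-class variance, which is equivalent to minimizing the within-class variance.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level T that maximises between-class variance.

    Maximising between-class variance is equivalent to minimising the
    within-class variance that Otsu's criterion is stated in terms of.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_score = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of their intensities
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, no valid split here
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        # Proportional to the between-class variance (constant factor dropped).
        score = w0 * w1 * (mean0 - mean1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def binarize(pixels, t):
    """Pixels above t become 1, all others 0."""
    return [1 if p > t else 0 for p in pixels]


# Hypothetical flattened radiograph: dark background and bright teeth.
sample = [10] * 50 + [12] * 50 + [200] * 50 + [205] * 50
T = otsu_threshold(sample)
binary = binarize(sample, T)
```

Any threshold between the two intensity clusters separates them equally well; the sketch keeps the first such level it encounters.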

Proposed Methodology

Dental biometrics is used in forensic dentistry to identify individuals based on their dental characteristics. Dental image processing helps identify the person even when the face or the whole body has been burnt in a fire accident. The radiographic image is first acquired and processed; its features are extracted and a unique code is generated.

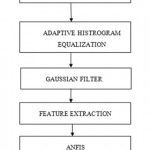

Some dental image records are already stored in the database. Using the ANFIS technique, the inputs are trained by the neural network in order to retrieve the closest match with respect to some salient features. The image is passed through a Gaussian filter to remove noise and is enhanced using adaptive histogram equalization. ANFIS (adaptive neural fuzzy inference system) is then used for identification based on the available data. The ANFIS is trained beforehand on the stored data; the input image is then compared with the ANFIS records, and the result is produced.

Figure 2: Block diagram for detection

Reading Input Image

A = imread(filename, fmt) reads a grayscale or color image from the file specified by the string filename. If the file is not in the current folder, or in a folder on the MATLAB path, specify the full pathname.

The text string fmt specifies the format of the file by its standard file extension. To see a list of supported formats, with their file extensions, use the imformats function. If imread cannot find a file named filename, it looks for a file named filename.fmt.

Adaptive Histogram Equalization

Adaptive histogram equalization (AHE) is a computer image processing technique used to improve contrast in images. It is suitable for improving the local contrast and enhancing the definition of edges in each region of an image. The process of adjusting intensity values can be done automatically using histogram equalization. Histogram equalization transforms the intensity values so that the histogram of the output image approximately matches a specified histogram. By default, the histogram equalization function, histeq, tries to match a flat histogram with 64 bins, but you can specify a different histogram instead. AHE improves on this by transforming each pixel with a transformation function derived from a neighborhood region. In its simplest form, each pixel is transformed based on the histogram of a square surrounding the pixel.

Properties of AHE

The size of the neighborhood region is a parameter of the method. It establishes a characteristic length scale: contrast at smaller scales is enhanced, while contrast at larger scales is reduced.

Due to the nature of histogram equalization, the result value of a pixel under AHE is proportional to its rank among the pixels in its neighborhood. This allows an efficient implementation on dedicated hardware that can compare the centre pixel with all other pixels in the neighborhood. An unnormalized result value can be computed by adding 2 for each pixel with a smaller value than the centre pixel, and adding 1 for each pixel with equal value.
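The rank-based computation described above can be illustrated with a short sketch. It is written in plain Python for clarity and is not the MATLAB routine used in this work; the function name `ahe_rank_value`, the `radius` parameter and the decision to clip the window at the image border are assumptions made for the example.

```python
def ahe_rank_value(image, row, col, radius):
    """Unnormalised AHE value of pixel (row, col) from its rank in a square window.

    Adds 2 for every neighbour darker than the centre pixel and 1 for every
    neighbour equal to it. The window is clipped at the image border.
    """
    height, width = len(image), len(image[0])
    centre = image[row][col]
    score = 0
    for r in range(max(0, row - radius), min(height, row + radius + 1)):
        for c in range(max(0, col - radius), min(width, col + radius + 1)):
            if r == row and c == col:
                continue  # compare the centre only with the other pixels
            if image[r][c] < centre:
                score += 2
            elif image[r][c] == centre:
                score += 1
    return score


# Hypothetical 3x3 patch of gray levels.
patch = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
centre_value = ahe_rank_value(patch, 1, 1, radius=1)
```

Dividing the score by twice the number of neighbours would map it to the range 0 to 1; that normalisation is left out to match the unnormalized value described in the text.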

Gaussian Filter

The Gaussian filter concentrates its weight around the peak at the centre of the kernel. The Gaussian smoothing operator is used to blur images and remove detail and noise. In this sense it is similar to the mean filter, but it uses a different kernel that represents the shape of a Gaussian (bell-shaped) hump. Gaussian smoothing is very effective for removing Gaussian noise. The weights give higher importance to pixels close to the centre of the kernel, which reduces edge blurring compared with a mean filter.

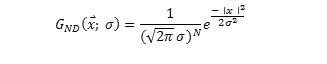

The Gaussian kernel.
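As an illustration of the smoothing step, the sketch below builds a kernel from the standard two-dimensional Gaussian, G(x, y) = (1 / (2πσ²)) · exp(−(x² + y²) / (2σ²)), and applies it to an image. The standard form is assumed here because the kernel figure itself is not reproduced in the text. The function names `gaussian_kernel` and `smooth`, the kernel size, the value of sigma and the border handling (edge replication) are all assumptions for the example. The kernel is normalised so its weights sum to one, which makes the leading constant unnecessary.

```python
import math


def gaussian_kernel(size, sigma):
    """Return a size x size Gaussian kernel (size must be odd), weights summing to 1."""
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def smooth(image, kernel):
    """Filter the image with the kernel, replicating edge pixels at the border."""
    height, width = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            acc = 0.0
            for dy in range(-half, half + 1):
                for dx in range(-half, half + 1):
                    rr = min(max(r + dy, 0), height - 1)  # clamp row index
                    cc = min(max(c + dx, 0), width - 1)   # clamp column index
                    acc += image[rr][cc] * kernel[dy + half][dx + half]
            out[r][c] = acc
    return out


# Hypothetical test image: a single bright noise pixel on a dark background.
kernel = gaussian_kernel(5, sigma=1.0)
noisy = [[0] * 5 for _ in range(5)]
noisy[2][2] = 100
blurred = smooth(noisy, kernel)
```

The isolated bright pixel is spread over its neighbours, with most of the weight staying near the centre.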

Feature Extraction

In machine learning, pattern recognition and image processing, feature extraction starts from an initial set of measured data and builds derived values (features) intended to be informative and non-redundant, facilitating the subsequent learning and generalization steps, and in some cases leading to better human interpretation. Feature extraction is related to dimensionality reduction. When the input data to an algorithm is too large to be processed and is suspected to be redundant (e.g. the same measurement in both feet and meters), it can be transformed into a reduced set of features. Feature extraction involves reducing the amount of resources required to describe a large set of data.

SFTA (segmentation based fractal texture analysis):

Texture features are extracted from an image using the SFTA algorithm. The extraction algorithm decomposes the input image into a set of binary images, from which the fractal dimensions of the resulting regions are computed in order to describe the segmented texture patterns. The decomposition of the input image is achieved by the Two-Threshold Binary Decomposition (TTBD) algorithm, which was proposed together with SFTA. The original authors of SFTA evaluated it for content-based image retrieval (CBIR) and image classification, comparing its performance with that of other widely used feature extraction methods, and reported higher accuracy for both tasks.

The function returns a 1 by 6*nt vector D extracted from the input grayscale image I using the SFTA (Segmentation-based Fractal Texture Analysis) algorithm. The feature vector corresponds to texture information extracted from the input image I.

function [D] = sfta(I, nt)
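The decomposition step behind this feature vector can be sketched as follows. This is a simplified illustration in plain Python, not the sfta function itself. The thresholds are assumed to be given (for example from a multi-level Otsu step). The pairing scheme (each threshold with the maximum gray level, plus each pair of adjacent thresholds) is one reading of TTBD and is an assumption here. The fractal dimension of the region borders is omitted, so the sketch yields only two features per binary image (area and mean gray level) rather than the three that SFTA computes. The names `ttbd_masks` and `simple_region_features` are hypothetical.

```python
def ttbd_masks(image, thresholds, max_level=255):
    """Two-threshold binary decomposition: returns 2 * len(thresholds) binary masks.

    A pixel is set to 1 in a mask when lower < pixel <= upper.
    """
    ts = sorted(thresholds)
    # Each threshold paired with the maximum gray level ...
    pairs = [(t, max_level) for t in ts]
    # ... plus each pair of adjacent thresholds (the last one closes at max_level).
    bounds = ts + [max_level]
    pairs += list(zip(bounds[:-1], bounds[1:]))
    return [[[1 if lower < p <= upper else 0 for p in row] for row in image]
            for lower, upper in pairs]


def simple_region_features(image, masks):
    """Area and mean gray level per mask (fractal dimension is left out)."""
    features = []
    for mask in masks:
        values = [p for img_row, mask_row in zip(image, mask)
                  for p, m in zip(img_row, mask_row) if m]
        area = len(values)
        mean_gray = sum(values) / area if area else 0.0
        features.extend([area, mean_gray])
    return features


# Hypothetical 2x3 grayscale image and two thresholds (nt = 2).
img = [[10, 60, 120],
       [200, 250, 30]]
masks = ttbd_masks(img, thresholds=[50, 150])
features = simple_region_features(img, masks)
```

With nt thresholds the sketch produces 2*nt masks; adding a border fractal dimension per mask would give three values per mask, which is how a vector of length 6*nt arises.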

Adaptive Neural Fuzzy Inference System (ANFIS)

ANFIS is a combination of neural networks and fuzzy logic. It is used for system identification based on available data. It belongs to a class of adaptive networks that are functionally equivalent to fuzzy inference systems. It uses a hybrid learning algorithm; that is, it uses neural network training to find the parameters of a fuzzy system. ANFIS can represent Sugeno and Tsukamoto fuzzy models. It consists of a set of rules and predefined commands. ANFIS is a kind of artificial neural network based on the Takagi-Sugeno fuzzy inference system, and it integrates both neural network and fuzzy logic principles. Its inference system corresponds to a set of fuzzy IF-THEN rules that have the learning capability to approximate nonlinear functions.

ANFIS is one of the best tradeoffs between neural and fuzzy systems. In ANFIS, the inputs are used for training and classification, after which the correct match is retrieved from the database. The membership function parameters are tuned using a backpropagation-type technique. The parameters associated with the membership functions change through the learning process. The adjustment of these parameters is guided by a gradient vector, which provides a measure of how well the fuzzy inference system is modeling the input/output data for a given set of parameters. Once the gradient vector is obtained, any of several optimization routines can be applied in order to adjust the parameters so as to reduce some error measure.
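To make the structure of such a system concrete, the sketch below evaluates a small first-order Sugeno fuzzy model with Gaussian membership functions. It covers only the forward pass (membership, rule firing strength, normalisation, weighted output) and does not perform the training described above. The two rules, their membership parameters and their output coefficients are hypothetical values chosen for illustration, as are the names `gauss_mf` and `sugeno_forward`.

```python
import math


def gauss_mf(x, centre, sigma):
    """Gaussian membership function with the given centre and width."""
    return math.exp(-((x - centre) ** 2) / (2 * sigma ** 2))


def sugeno_forward(x, y, rules):
    """Forward pass of a two-input first-order Sugeno model.

    Each rule holds membership parameters for x ('a') and y ('b') and the
    coefficients (p, q, r) of its linear output f = p*x + q*y + r.
    """
    # Firing strength of each rule: product of its two memberships.
    strengths = [gauss_mf(x, *rule["a"]) * gauss_mf(y, *rule["b"])
                 for rule in rules]
    total = sum(strengths)
    # Linear consequent of each rule.
    outputs = [p * x + q * y + r for p, q, r in (rule["coef"] for rule in rules)]
    # Output: consequents weighted by normalised firing strengths.
    return sum(w / total * f for w, f in zip(strengths, outputs))


# Hypothetical rule base: rule 1 fires near (0, 0) and outputs 0,
# rule 2 fires near (1, 1) and outputs 1.
rules = [
    {"a": (0.0, 1.0), "b": (0.0, 1.0), "coef": (0.0, 0.0, 0.0)},
    {"a": (1.0, 1.0), "b": (1.0, 1.0), "coef": (0.0, 0.0, 1.0)},
]
midpoint = sugeno_forward(0.5, 0.5, rules)
near_first = sugeno_forward(0.0, 0.0, rules)
```

During training, the membership centres and widths and the coefficients (p, q, r) are the parameters that the learning procedure adjusts. Inputs far from every membership centre can drive all firing strengths to zero, a case this sketch does not guard against.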

The ANFIS Editor GUI is invoked using anfisedit('a'), which opens the editor so that you can implement anfis using a FIS structure stored as a file a.fis.

Artificial neural network (ANN)

A neural network is an information processing paradigm inspired by the way biological nervous systems process information. The neural network training model has three layers. The nodes of the input layer are passive; that is, they do not modify the data. They receive a single value on their input and duplicate the value to their multiple outputs. In comparison, the nodes of the hidden layer and the output layer are active. The hidden layer lets the network represent functions that are not linearly separable, such as the XOR operation.
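A three-layer network of the kind described above can be illustrated with hand-set weights. XOR is a common example because a network without a hidden layer cannot compute it. The sketch below is plain Python with a step activation; the weights and the names `step` and `xor_network` are assumptions chosen for illustration and are not the network trained in this work.

```python
def step(value):
    """Step activation: fires (1) when the weighted input is positive."""
    return 1 if value > 0 else 0


def xor_network(a, b):
    """Three-layer network with hand-set weights that computes XOR.

    Input layer: passes a and b through unchanged (passive nodes).
    Hidden layer: one node behaves like OR, the other like AND.
    Output layer: fires when OR is on and AND is off.
    """
    hidden_or = step(1.0 * a + 1.0 * b - 0.5)
    hidden_and = step(1.0 * a + 1.0 * b - 1.5)
    return step(1.0 * hidden_or - 1.0 * hidden_and - 0.5)


truth_table = [(a, b, xor_network(a, b)) for a in (0, 1) for b in (0, 1)]
```

In a trained network the weights are learned from data rather than set by hand, but the layer roles are the same.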

Simulation Results

Figure 3: Output images

Fig. 4 shows the neural network training window, which reports the epoch, time, performance, gradient and validation checks. The epoch count is the number of training passes; the network should reach the desired output within the allowed number of epochs, otherwise training ends with an error. The gradient describes the slope of the loss function, which becomes small as the loss approaches its minimum. Validation checks count the successive epochs in which the validation performance fails to improve. Fig. 5 shows the performance of the neural network.

Figure 4: Neural network image

Figure 5(a): Performance analysis

Figure 5(b): Performance analysis

In the ANFIS editor (Fig. 6) we first import the file, which consists of a set of dental input images. We then load the data, that is, the set of trained data already stored in the ANFIS database (Fig. 7). Finally we generate the FIS (fuzzy inference system), train it, and test it (Fig. 8).

Figure 6: Import the file

Figure 7: Loading ANFIS data

Figure 8: Generating, training and testing FIS

After the neural network has been trained and tested using the ANFIS algorithm, with the dental images checked against the target images in the database, the dental images are classified by comparing them on the basis of the extracted features. The classification of dental images is used for person recognition and security. The above procedure is carried out using the adaptive neural fuzzy inference system through a graphical user interface. The input is compared with the ANFIS database images, and the identified person is displayed.

Conclusion

The methods presented in this paper were tested on a large number of images and trained with accurate values in a neural network using the ANFIS algorithm. The proposed method extracts features from the radiograph image using SFTA (segmentation-based fractal texture analysis). The input images are stored in the database and compared against the ANFIS database, which holds the features already extracted from the stored images. The method then classifies which person the input image belongs to and displays the result in the command window. The ANFIS method is efficient for comparing the images.

References

- Amina Khatra, "Dental radiograph as human biometric identifier: an Eigen values/Eigen vector approach", Cibtech Journal of Bio-Protocols, ISSN: 2319-3840, Vol. 2 (3), September-December 2013.

- Dipali Rindhe, A.N. Shaikh, "A Role of Dental Radiograph in Human Forensic Identification", International Journal of Computer Science and Mobile Computing, Vol. 2, Issue 12, December 2013.

- Deven N. Trivedi, Ashish M. Kothari, Sanjay Shah and Shingala Nikunj, "Dental Image Matching By Canny Algorithm for Human Identification", International Journal of Advanced Computer Research (ISSN (print): 2249-7277; 2277-7970), Volume 4, Number 4, Issue 17, December 2014.

- Faisal Rehman, M. Usman Akram, "Human identification using dental biometric analysis", IEEE, 2015.

- Kalyani Mali, Samayita Bhattacharya, "Comparative Study of Different Biometric Features", International Journal of Advanced Research in Computer and Communication Engineering, Vol. 2, Issue 7, July 2013.

- Martin L. Tangel, Chastine Fatichah, Fei Yan, Kaoru Hirota, "Dental Classification for Periapical Radiograph Based On Multiple Fuzzy Attribute", IEEE, 2013.

- Omaima Nomir, Mohamed Abdel-Mottaleb, "Combining Matching Algorithms for human identification using dental x-ray radiograph", 1-4244-1437- ©2007 IEEE.

- Omaima Nomir, Mohamed Abdel-Mottaleb, "Fusion of Matching Algorithms for Human Identification Using Dental X-Ray Radiographs", IEEE, Vol. 3, No. 2, June 2008.

- Rohit Thanki, Deven Trivedi, "Introduction of Novel Tool for Human Identification Based on Dental Image Matching", International Journal of Emerging Technology and Advanced Engineering (ISSN 2250-2459), Volume 2, Issue 10, October 2012.

- Sunita Sood, Ranju Kanwar, Malika Singh, "Dental Bio-metrics as Human Personal Identifier using Pixel Neighborhood Segmentation Techniques", International Journal of Computer Applications (0975-8887), Volume 96, No. 11, June 2014.

- Samira Bahojb Ghodsi, Karim Faez, "A Novel Approach for Matching of Dental Radiograph Image Using Zernike Moment", International Journal of Emerging Technology and Advanced Engineering (ISSN 2250-2459), Volume 2, Issue 10, December 2012.

- Shubhangi Dighe, Revati Shriram, "Pre-processing, Segmentation and Matching of Dental Radiographs used in Dental Biometrics", International Journal of Science and Applied Information Technology, Volume 1, No. 2, May-June 2012.


This work is licensed under a Creative Commons Attribution 4.0 International License.